Montreal AI Ethics Institute principal researcher Abhishek Gupta shares this view.

“E.g., will synthetic child increase the creation of authentic child ? Will it increase the number of pedophiles’ attacks?” “I worry about other effects of synthetic images of illegal content - that it will exacerbate the illegal behaviors that are portrayed,” Dotan told TechCrunch via email. That bodes poorly for the future of these AI systems, according to Ravit Dotan, VP of responsible AI at Mission Control. A study carried out in 2019 revealed that, of the 90% to 95% of deepfakes that are non-consensual, about 90% are of women. Women, unfortunately, are most likely by far to be the victims of this. Those two capabilities could be risky when combined, allowing bad actors to create pornographic “deepfakes” that - worst-case scenario - might perpetuate abuse or implicate someone in a crime they didn’t commit. (The license for the open source Stable Diffusion prohibits certain applications, like exploiting minors, but the model itself isn’t fettered on the technical level.) Moreover, many don’t have the ability to create art of public figures, unlike Stable Diffusion. Other AI art-generating systems, like OpenAI’s DALL-E 2, have implemented strict filters for pornographic material. Stable Diffusion is very much new territory. However, Safety Classifier - while on by default - can be disabled. One of these mechanisms is an adjustable AI tool, Safety Classifier, included in the overall Stable Diffusion software package that attempts to detect and block offensive or undesirable images.

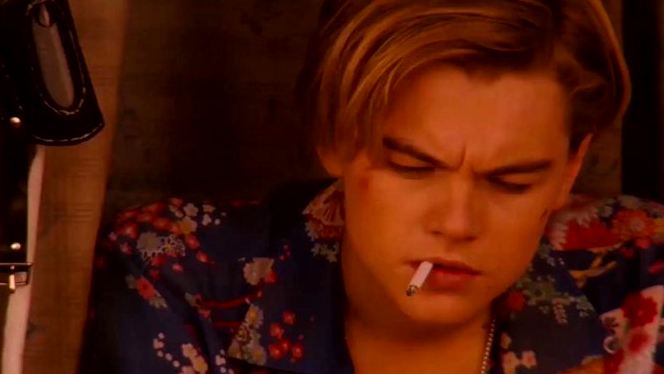

On the infamous discussion board 4chan, where the model leaked early, several threads are dedicated to AI-generated art of nude celebrities and other forms of generated pornography.Įmad Mostaque, the CEO of Stability AI, called it “unfortunate” that the model leaked on 4chan and stressed that the company was working with “leading ethicists and technologies” on safety and other mechanisms around responsible release. Midjourney has launched a beta that taps Stable Diffusion for greater photorealism.īut Stable Diffusion has also been used for less savory purposes.

For example, NovelAI has been experimenting with Stable Diffusion to produce art that can accompany the AI-generated stories created by users on its platform. But the model’s unfiltered nature means not all the use has been completely above board.įor the most part, the use cases have been above board. Stability AI’s Stable Diffusion, high fidelity but capable of being run on off-the-shelf consumer hardware, is now in use by art generator services like Artbreeder, Pixelz.ai and more. A new open source AI image generator capable of producing realistic pictures from any text prompt has seen stunningly swift uptake in its first week.
